Generating natural-looking digits with neural networks

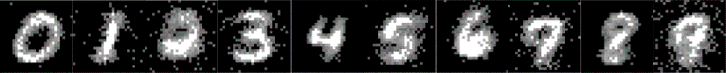

In this post I will show you how I got a neural network to write digits that look like they have been handwritten. In fact, the digits in the first image of this post were generated by that very neural network. The code that did all this is here.

It all started when I read, some time ago, about a funny way in which you can use neural networks. You train a neural network that takes a vector of size $N$ as input, and you teach it to do nothing. That is, you teach your neural network to behave as the identity function... but with a twist! If your input size is $N$, somewhere in the middle of the network you put a layer with fewer than $N$ neurons! When that happens, the vector $x$ that goes in first has to be squeezed through that tight layer, and then it expands again to become the output of the network.
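Here is a minimal sketch of that idea in PyTorch, the library used for this post. The layer sizes (784 inputs, a hidden layer of 128, a 10-neuron bottleneck) and the random stand-in data are assumptions for illustration only. The point is that the training target is the input itself.

```python
import torch
from torch import nn

# Assumed sizes, for illustration only: N = 784 (a flattened 28x28 image)
# squeezed through a bottleneck of 10 neurons.
N, BOTTLENECK = 784, 10

autoencoder = nn.Sequential(
    nn.Linear(N, 128), nn.ReLU(),
    nn.Linear(128, BOTTLENECK),            # the "squeeze it tight" layer
    nn.Linear(BOTTLENECK, 128), nn.ReLU(),
    nn.Linear(128, N), nn.Sigmoid(),       # outputs in (0, 1), like pixel values
)

def train_identity(model, data, epochs=100, lr=1e-3):
    """Train `model` to reproduce its input, i.e. to act as the identity.
    Returns the loss of the last training step."""
    optimizer = torch.optim.Adam(model.parameters(), lr=lr)
    loss_fn = nn.MSELoss()
    for _ in range(epochs):
        optimizer.zero_grad()
        loss = loss_fn(model(data), data)  # the target is the input itself
        loss.backward()
        optimizer.step()
    return loss.item()

torch.manual_seed(0)
fake_images = torch.rand(64, N)  # random stand-in for real digit images
final_loss = train_identity(autoencoder, fake_images)
print(f"reconstruction MSE after training: {final_loss:.4f}")
```

With real digit images instead of random noise, the 10-neuron bottleneck is what forces the network to find a compact description of each image.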

If you now slice your network in half, the part from the input up to the "squeeze it tight" layer compresses your inputs. The second half, from the "squeeze it tight" layer to the output, decompresses your data. (For those of you interested in looking this up, this kind of network is called an autoencoder; its first half is usually called the encoder and its second half the decoder.)

Let that sink in for a second.
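In PyTorch, "slicing the network in half" can be taken quite literally. Indexing an `nn.Sequential` with a slice returns a new `nn.Sequential` built from the same layer objects, so no weights are copied. The sketch below assumes an illustrative 784 → 128 → 10 → 128 → 784 architecture with the bottleneck layer at index 2. The names `compressor` and `decompressor` are made up here.

```python
import torch
from torch import nn

# Illustrative bottleneck architecture (assumed, not the post's real one).
autoencoder = nn.Sequential(
    nn.Linear(784, 128), nn.ReLU(),
    nn.Linear(128, 10),                  # bottleneck: index 2
    nn.Linear(10, 128), nn.ReLU(),
    nn.Linear(128, 784), nn.Sigmoid(),
)

# Slicing an nn.Sequential gives a new nn.Sequential sharing the same layers.
compressor = autoencoder[:3]     # input -> bottleneck
decompressor = autoencoder[3:]   # bottleneck -> output

x = torch.rand(1, 784)
code = compressor(x)             # 10 numbers
x_hat = decompressor(code)       # back to 784 numbers
print(code.shape, x_hat.shape)   # torch.Size([1, 10]) torch.Size([1, 784])

# Running the two halves one after the other is the full network.
assert torch.allclose(x_hat, autoencoder(x))
```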

Now that I "know" how to compress things, imagine this scenario. I teach a neural network to compress digit images into a vector of size $10$ (one component per existing digit), and let us call this network the encoder. To train the encoder, all I have to do is effectively teach the network to classify the images of the digits. The $10$ outputs of a digit classifier already form a vector of size $10$ with one component per digit. After training the encoder, I create a new network (let's call it the decoder) that takes $10$ values as input and returns an image as output. Then I teach the decoder to undo whatever the encoder does, that is, to rebuild an image from the $10$ numbers the encoder produced for it.

After doing that for a bit, say I take this image of a $2$:

If I feed that image into my encoder, this is what comes out:

```
tensor([[-3.2723, 3.7296, 9.6557, 4.9145, -8.6327, -3.1320,
         -3.5934, 6.0783, -0.8307, -5.3434]])
```

The largest entry, $9.6557$, sits in position $2$ (counting from $0$). That is the encoder's way of saying that this image is a $2$.

If I now take that vector and feed it into the decoder, this is the image that comes out:

Looks good, right? Of course it is not exactly the same. But take a second to appreciate that the original image was $28 \times 28$, hence had $784$ values. Those $784$ values were compressed into the $10$ numbers you saw above, and then those $10$ numbers were used to reconstruct this beautiful $2$.
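The two-stage training described above (first the encoder as a plain classifier, then the decoder to undo it) can be sketched in PyTorch as follows. The architectures, learning rate, number of steps and the random stand-in data are all assumptions. To get anything resembling the $2$ above you would need real MNIST images and labels.

```python
import torch
import torch.nn.functional as F
from torch import nn

torch.manual_seed(0)

# Assumed architectures; the post does not spell out the real ones.
encoder = nn.Sequential(nn.Flatten(), nn.Linear(784, 128), nn.ReLU(), nn.Linear(128, 10))
decoder = nn.Sequential(nn.Linear(10, 128), nn.ReLU(), nn.Linear(128, 784), nn.Sigmoid())

# Random stand-in data: 128 "images" of shape 1x28x28 and labels 0-9.
images = torch.rand(128, 1, 28, 28)
labels = torch.randint(0, 10, (128,))

# Stage 1: train the encoder as an ordinary 10-class classifier.
enc_opt = torch.optim.Adam(encoder.parameters(), lr=1e-3)
for _ in range(50):
    enc_opt.zero_grad()
    F.cross_entropy(encoder(images), labels).backward()
    enc_opt.step()

# Stage 2: keep the encoder fixed and train the decoder to undo it,
# mapping the encoder's 10 outputs back to the original 784 pixels.
with torch.no_grad():
    codes = encoder(images)                # shape (128, 10)
targets = images.flatten(start_dim=1)      # shape (128, 784)

dec_opt = torch.optim.Adam(decoder.parameters(), lr=1e-3)
for _ in range(50):
    dec_opt.zero_grad()
    F.mse_loss(decoder(codes), targets).backward()
    dec_opt.step()

# Round trip for a single image: 784 values -> 10 numbers -> 784 values.
with torch.no_grad():
    code = encoder(images[:1])             # plays the role of the tensor printed above
    reconstruction = decoder(code).reshape(1, 28, 28)
predicted_digit = code.argmax(dim=1).item()
```

`predicted_digit` is simply the position of the largest entry of the code, i.e. the encoder's guess for which digit it saw.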

Of course, to build this $2$ we first had to have a $2$ go through the encoder, and I don't really like that step. What I had envisioned was a system that only made use of a single network. Once the encoder has been used to train the decoder, I want to be able to throw it away!

One possible first idea would be to feed the decoder a vector of zeroes with a single $1$ in the right place. The reason that does not work so well is that the encoder never compresses images into such nice-looking vectors (look at the output above), so the decoder doesn't really know how to handle them. Instead, I have a little helper function that creates a random vector of size $10$. Each entry is drawn at random from $[-2, 1]$, except for the coordinate that corresponds to the digit I want to create: that one is a random number in $[13, 14]$. Using that function $5$ times for each digit, I was able to create these "handwritten" digits:

It is true that they look practically the same (a problem I hope to present as solved in a future post), but they are not identical!
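For reference, here is one way the helper function described above could be written. The name `make_decoder_input` is hypothetical, and I am assuming plain uniform sampling for both ranges. Only the ranges $[-2, 1]$ and $[13, 14]$ come from the post.

```python
import random

def make_decoder_input(digit, size=10, low=(-2.0, 1.0), high=(13.0, 14.0)):
    """Hypothetical version of the helper: every entry is uniform in [-2, 1],
    except entry `digit`, which is uniform in [13, 14]."""
    if not 0 <= digit < size:
        raise ValueError(f"digit must be in [0, {size}), got {digit}")
    vec = [random.uniform(*low) for _ in range(size)]
    vec[digit] = random.uniform(*high)  # make the chosen digit stand out
    return vec

# Five different inputs per digit, as in the post.
inputs = {d: [make_decoder_input(d) for _ in range(5)] for d in range(10)}
```

To feed one of these vectors to a PyTorch decoder, it can be wrapped as `torch.tensor([vec])`, which has shape `(1, 10)`.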

Then I looked at those pictures and chose one good-looking digit out of each row. I used some basic thresholding to get rid of the light-grey noise in the background, setting the background to black and the digit to white, to see if I could make the digits easier to read. This is what I ended up with:

which is not too shabby, in my opinion.
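The post does not say exactly which thresholding rule was used, so the following is just one simple possibility. Every pixel at or above a cutoff `t` becomes white and everything else becomes black. The cutoff of `0.5` and the list-of-lists image format are assumptions.

```python
def threshold(image, t=0.5):
    """Binarize a grayscale image given as rows of floats in [0, 1]:
    pixels at or above `t` become white (1.0), the rest black (0.0)."""
    return [[1.0 if p >= t else 0.0 for p in row] for row in image]

noisy = [
    [0.05, 0.20, 0.10],
    [0.15, 0.90, 0.30],
    [0.10, 0.85, 0.05],
]
print(threshold(noisy))
# [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 1.0, 0.0]]
```

A higher `t` removes more of the grey background noise, but it may also erase faint strokes of the digit itself.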

As future work, there is still everything to be done! Possible next steps include trying different neural network architectures and studying whether the accuracy of the encoder matters much. I could also look for cleverer ways of building the decoder's input and of enhancing the image the decoder produces. Finally, I would like to understand whether the network can be made to generate more distinct digits, etc.

All this was written in a Jupyter notebook that you can find here. I used PyTorch for this, so if you want to run it you'll need to have it installed. Otherwise, you can still look at the pictures and check the specifications of what I did. You can then try to reproduce this with your own packages and whatnot.

TL;DR the images throughout this post are of digits that were generated by a neural network.

- RGS